Cutting-Edge Texturing for VFX: Substance at Double Negative

After over a year of collaboration, we are thrilled to share that Double Negative has been developing innovative texturing workflows using Substance for feature film VFX! In this post, Marc Austin (Lead TD Generalist at Double Negative) tells us about the texturing pipeline he built for one of the central assets of the recently released Assassin’s Creed movie.

Tell us about your role at Double Negative.

As a generalist here at Double Negative, my day-to-day tasks can change quite a lot between shows. I work mainly on hero or complex build tasks, my specialty being surfacing. I also work closely with lighting and pipeline departments to set up sequences.

Why did you choose to use Substance Designer for the Assassin’s Creed movie?

We always keep an eye on promising new technologies and products here at Double Negative. Late in 2015, we were looking into how to approach the complex surfacing challenge of the Animus (the device Cal uses to relive his ancestors’ memories).

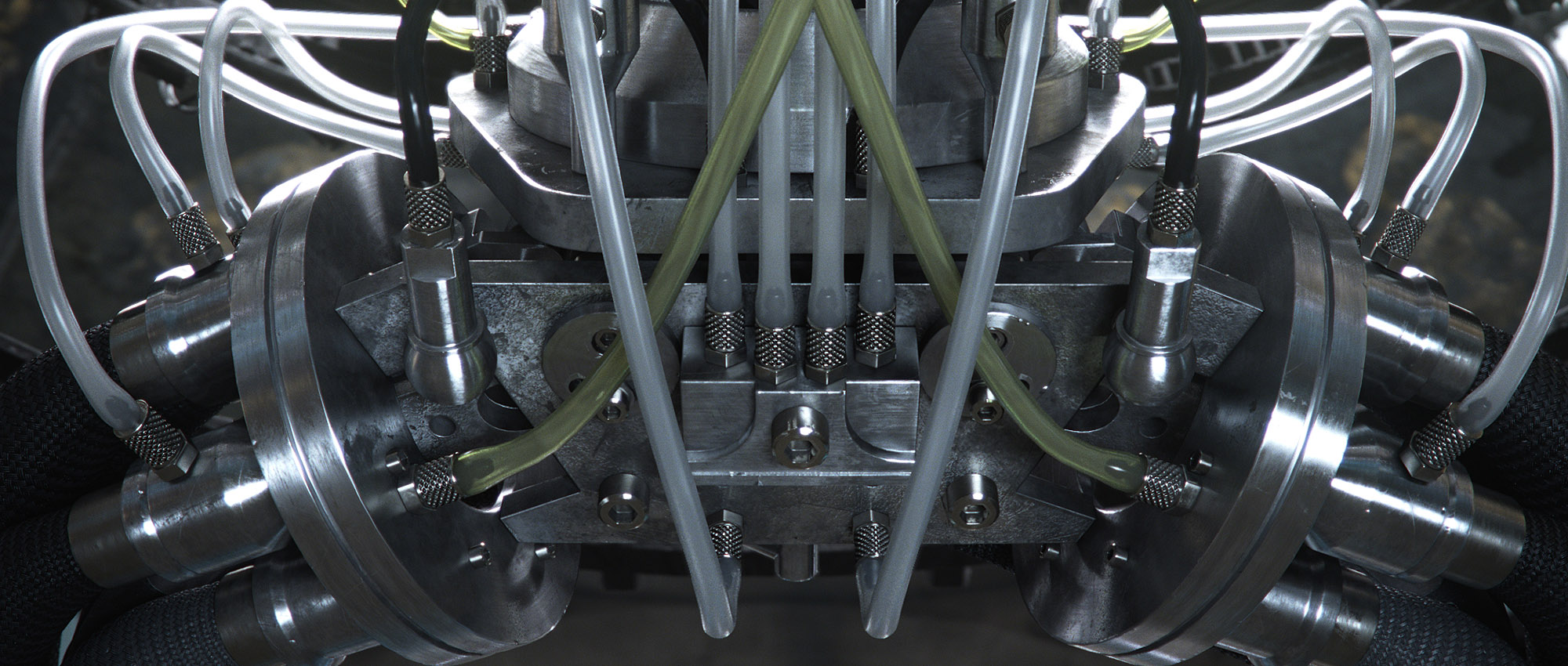

The concept model of the Animus was made of thousands of mechanical links, pipes, and pneumatic muscles. The modeling task was daunting as all these mechanisms had to be fully articulated with a wide range of possible poses.

In a normal build pipeline, a model sign-off would trigger a surfacing task to paint and develop the look of the asset for shots.

However, for the Animus, we needed a way to surface the asset in parallel to meet the deadline. The modeling and rigging were going to be in constant flux during the show and surfacing couldn’t wait for a finished model.

During this early stage, Substance Designer was showcasing features such as Iray integration and the pixel processor, which opened up possibilities for surfacing the Animus in Substance Designer.

With some testing, we came up with a methodology of batch baking Substances per UV tile (UDIM). Using parametric Substances, we could design a surfacing pipeline that created a new set of textures far quicker than hand painting, and we could re-run these Substances each time there was a modeling update. Coupled with the types of materials used on the Animus (metals and plastics), Substance Designer seemed like a perfect fit.

You can see the Animus in action at 0:48 and 1:39 in the official trailer.

Can you walk us through the texturing pipeline you developed for the Animus?

The basic stages we settled on focus largely on working around the absence of UDIMs in Substance.

Model preparation

The Animus was prepared in Maya so we could preview small parts of the model in Substance Designer. We subdivided the model and exported the geometry based on which UV tile the geometry occupied, with 200+ UDIMs in total.
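As a rough illustration of the per-tile split described above, the sketch below groups faces by the UDIM tile they occupy using the standard UDIM numbering (1001 + column + 10 × row). The function names and the `faces` data layout are hypothetical; the interview does not describe how the Maya export was actually scripted.

```python
import math


def udim_from_uv(u, v):
    """Return the UDIM tile number for a UV coordinate.

    Standard convention: tile 1001 covers u in [0, 1), v in [0, 1);
    there are 10 tiles per row.
    """
    if not (0 <= u < 10) or v < 0:
        raise ValueError("UV coordinate outside the UDIM range")
    return 1001 + int(math.floor(u)) + 10 * int(math.floor(v))


def group_faces_by_udim(faces):
    """Group face ids by the tile containing each face's UV centroid.

    `faces` is a hypothetical mapping of face id -> list of (u, v) pairs.
    The centroid is used so that a vertex lying exactly on a tile border
    does not push the face into the neighbouring tile.
    """
    groups = {}
    for face_id, uvs in faces.items():
        cu = sum(u for u, _ in uvs) / len(uvs)
        cv = sum(v for _, v in uvs) / len(uvs)
        groups.setdefault(udim_from_uv(cu, cv), []).append(face_id)
    return groups


if __name__ == "__main__":
    example = {
        "f0": [(0.1, 0.1), (0.9, 0.1), (0.5, 0.9)],
        "f1": [(1.1, 0.2), (1.9, 0.2), (1.5, 0.8)],
        "f2": [(0.2, 1.2), (0.8, 1.2), (0.5, 1.8)],
    }
    print(group_faces_by_udim(example))
```

Each resulting group could then be exported as its own piece of geometry for previewing in Substance Designer.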

Mesh Info Baking

We used Clarisse from Isotropix to bake the mesh data maps. We chose Clarisse since it was easier to develop custom shaders for and fit inside our publishing pipe.

Substance Designer

A Substance per material was created; these ranged from plastics and metal to rust and dirt. Our proprietary shaders needed different inputs than native Substance shaders, so all the outputs were converted to Double Negative-friendly outputs when we baked the Substances.

Baking script

We made a batch baking script which iterated through each Substance, changing the inputs and random seeds per UDIM. The result was a texture UDIM sequence per Substance material.
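The loop described here can be sketched as a job list: one entry per Substance and UDIM, each with its own mesh-map inputs and a reproducible random seed. This is only an assumed shape, not Double Negative's script; the file patterns, the `seed_for` helper, and the job dictionary keys are all hypothetical, and the actual render call (for example through Substance's external batch tools) is deliberately left out.

```python
import hashlib


def seed_for(material, udim, max_seed=2**31 - 1):
    """Derive a stable seed from the material name and UDIM number.

    Hashing keeps the seed identical between re-bakes, so a modeling
    update does not reshuffle the look of tiles that did not change.
    """
    digest = hashlib.sha256(f"{material}:{udim}".encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % max_seed


def build_bake_jobs(materials, udims, mesh_map_pattern, output_pattern):
    """Return one bake job per (material, UDIM) pair.

    The patterns are hypothetical format strings using {material} and {udim}.
    """
    jobs = []
    for material in materials:
        for udim in udims:
            jobs.append({
                "material": material,
                "udim": udim,
                "mesh_maps": mesh_map_pattern.format(udim=udim),
                "output": output_pattern.format(material=material, udim=udim),
                "random_seed": seed_for(material, udim),
            })
    return jobs


if __name__ == "__main__":
    jobs = build_bake_jobs(
        materials=["metal", "rust"],
        udims=[1001, 1002, 1011],
        mesh_map_pattern="bakes/animus_meshdata.{udim}.exr",
        output_pattern="textures/animus_{material}.{udim}.exr",
    )
    for job in jobs:
        print(job["output"], job["random_seed"])
```

Building the job list separately from executing it makes it easy to re-run only the tiles affected by a model update.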

Nuke clean up

Nuke was used to reduce the number of outputs our renderer used. Since each UDIM could have multiple materials, we needed to combine the textures to optimize our texture I/O. Material ID masks generated in Katana were used to mask between the Substances. These material ID maps matched the shader assignments that Katana used.
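The masking step amounts to a per-pixel blend of each material's texture through its ID mask. The sketch below shows that arithmetic on flat lists of pixel values in plain Python; it is an assumption about the general idea, not a description of the actual Nuke script, and the function and argument names are hypothetical.

```python
def combine_by_material_id(textures, masks, background=0.0):
    """Merge per-material textures for one UDIM into a single texture.

    `textures` and `masks` map a material name to a flat list of pixel
    values for the same tile. Materials are layered in dictionary order:
    where a mask is 1 the material's texture replaces what is below it,
    where it is 0 the lower layers show through.
    """
    if not textures:
        return []
    names = list(textures)
    size = len(textures[names[0]])
    out = [background] * size
    for name in names:
        tex, mask = textures[name], masks[name]
        if len(tex) != size or len(mask) != size:
            raise ValueError(f"size mismatch for material {name!r}")
        out = [o * (1.0 - m) + t * m for o, t, m in zip(out, tex, mask)]
    return out


if __name__ == "__main__":
    textures = {"metal": [0.8, 0.8, 0.8, 0.8], "rust": [0.2, 0.2, 0.2, 0.2]}
    masks = {"metal": [1.0, 1.0, 0.0, 0.0], "rust": [0.0, 0.0, 1.0, 1.0]}
    print(combine_by_material_id(textures, masks))
```

With non-overlapping ID masks, each pixel ends up holding the value of exactly one material, so the renderer reads one texture per tile instead of one per material.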

Render

These maps were used unaltered as the inputs to our shaders inside of Katana and Clarisse.

Although this was a new tool to work with in your field, could you tell us what was actually easy?

Matching the materials between the Substance previews and our shaders was very easy. Our shaders use the same underlying maths so a direct conversion was possible. This enabled us to use Substance Designer’s viewport as a very accurate preview tool so few or no adjustments were needed in our renders.

Making custom nodes was also easy. One example is a set of cylindrical and spherical mappers, which let us texture all of the mechanical parts of the Animus without seams.
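The interview does not say how these mapper nodes were built. As a point of reference, the textbook cylindrical and spherical projections from a 3D position to a texture coordinate look roughly like the sketch below; the assumption is that a similar calculation could be driven by a baked position map, and all function names here are hypothetical.

```python
import math


def cylindrical_uv(x, y, z, height):
    """Project a point onto a cylinder around the Y axis.

    u wraps once around the axis, v runs along the cylinder's height.
    """
    u = (math.atan2(z, x) / (2.0 * math.pi)) % 1.0
    v = y / height
    return u, v


def spherical_uv(x, y, z):
    """Project a point onto a sphere centred at the origin.

    u is the angle around the Y axis, v runs from 0 at the +Y pole
    to 1 at the -Y pole.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("cannot project the origin")
    u = (math.atan2(z, x) / (2.0 * math.pi)) % 1.0
    v = math.acos(max(-1.0, min(1.0, y / r))) / math.pi
    return u, v


if __name__ == "__main__":
    print(cylindrical_uv(0.0, 1.0, 1.0, height=2.0))
    print(spherical_uv(1.0, 0.0, 0.0))
```

Because the coordinates come from the point's position rather than the asset's UV layout, the pattern stays continuous across UV islands and tile borders.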

All the textures for the Animus were procedural; no bitmap textures were used. It was surprising how quickly I could match on-set references using a combination of shipped Substance Designer nodes and custom ones I made.

And what was hard? What solutions did you find?

Working around the lack of UDIMs in Substance Designer was the biggest challenge. Creating the batch baking methodology meant a large data set needed to be managed and iterated over each time a model update was made.

We have made good progress automating a lot of these steps since we finished the Animus. Hopefully, in the future, it will be easier and quicker to do updates.

Given what you learned, and that updates have been released since then (making the tools more suited for VFX), what would you look forward to doing next with the Substance toolset?

I would love to use Substance Painter; I can imagine a massive use case for it, but until UDIMs are implemented, managing hero assets is too difficult. We are, however, using it for smaller assets with promising results.

I’m currently developing a new suite of tools which will form the framework for far more complex Substances. An example of one of the tools is a dripping rust Substance which will always flow over the model due to gravity regardless of UV orientation or scale.

There is obviously a long list of feature requests coming from the VFX world, and users can be reassured that we are working on them 🙂 What would be your top 3?

UDIMs – It’s a must for VFX. I would love to see this supported in all of Allegorithmic’s products.

Full API – Scripting inside Substance Designer is limited. Having the external API is really nice but a fully featured API inside Substance Designer would let us do so much more.

LUTs and advanced viewer – Not as important as the first two, but having a custom LUT would mean surfacing could be authored directly from Substance Designer. Additional features for the viewer like custom back plates, A/B wipes, image history and temporarily viewing a single channel would be very nice to have.

Anything you would like to add?

Working with Substance has been a joy so far and it helped massively for the Animus. With the addition of a few more features, I can definitely see VFX studios using Substance as a backbone of their surfacing pipelines in the future.

We would like to give a special thanks to Marc and the team at Double Negative London for taking the time to share their experience with Substance and look forward to our ongoing collaboration. You can expect more news from the VFX/Animation side of things in 2017 🙂
